Debug Agent Skills Guide: From "Guessing Code" to Runtime Reality

In an era where AI-assisted programming is becoming increasingly prevalent, we have grown accustomed to using AI to write code. However, when it comes to the debugging process, the experience is often less than satisfactory. AI frequently turns into a "guessing machine," constantly modifying code to test its hypotheses. This not only consumes a significant amount of tokens but also pollutes the codebase.

The solution lies in a specialized capability known as the Debug Agent Skill. Designed to address AI's weak performance on complex debugging tasks, a Debug Agent Skill bridges the gap between static code and dynamic execution. As the volume of generated code grows, we need an automated Debug Agent Skill equipped with runtime context and logical reasoning capabilities.

I. Pain Point Analysis: Why Traditional AI Lacks Context

First, we need to understand why AI debugging often gets stuck.

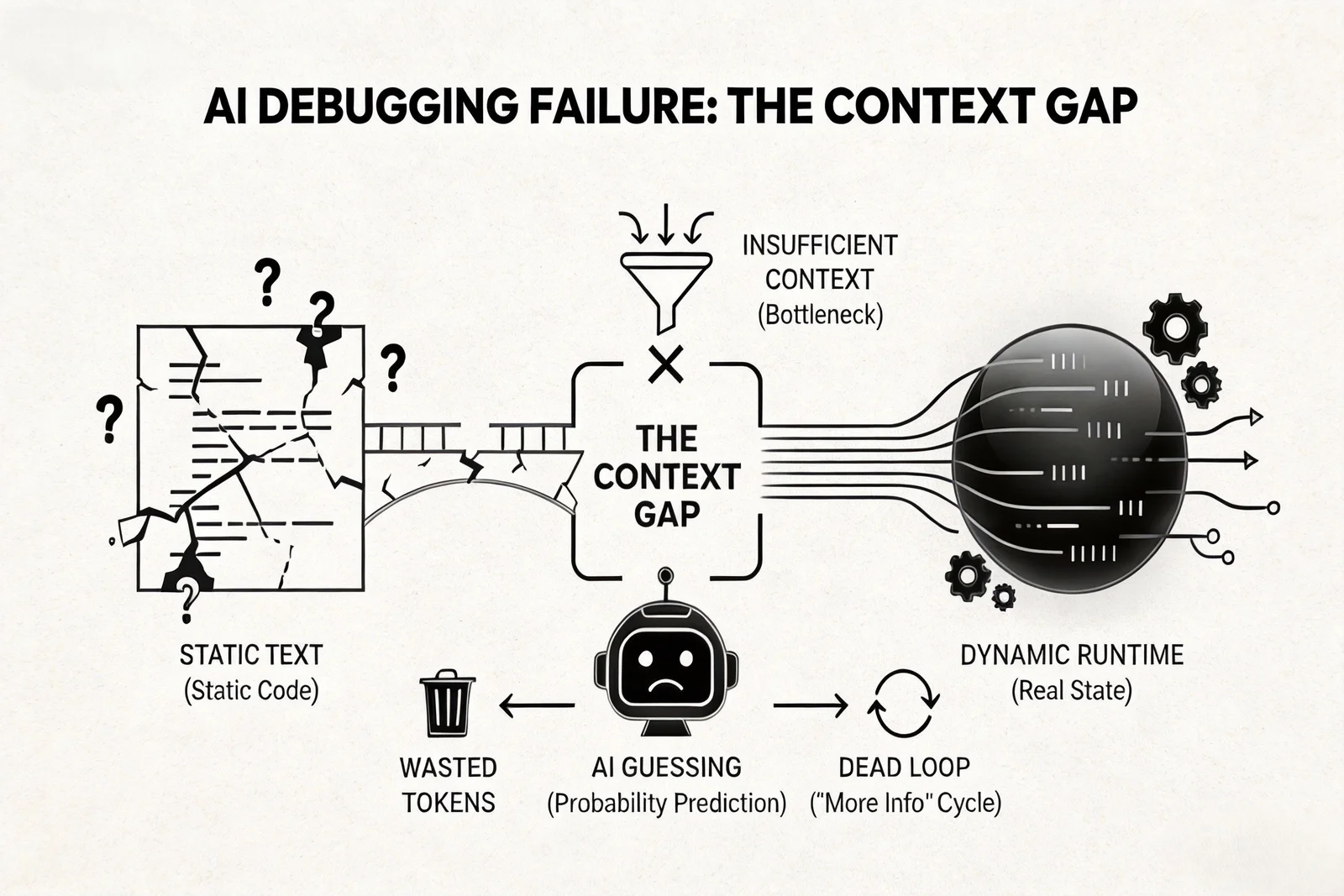

Unlike human engineers, AI cannot reason about the entire system state. It heavily relies on the immediate information you provide: error logs, recent code changes, partial outputs, and chat history. This lack of Runtime Context is the critical failure point.

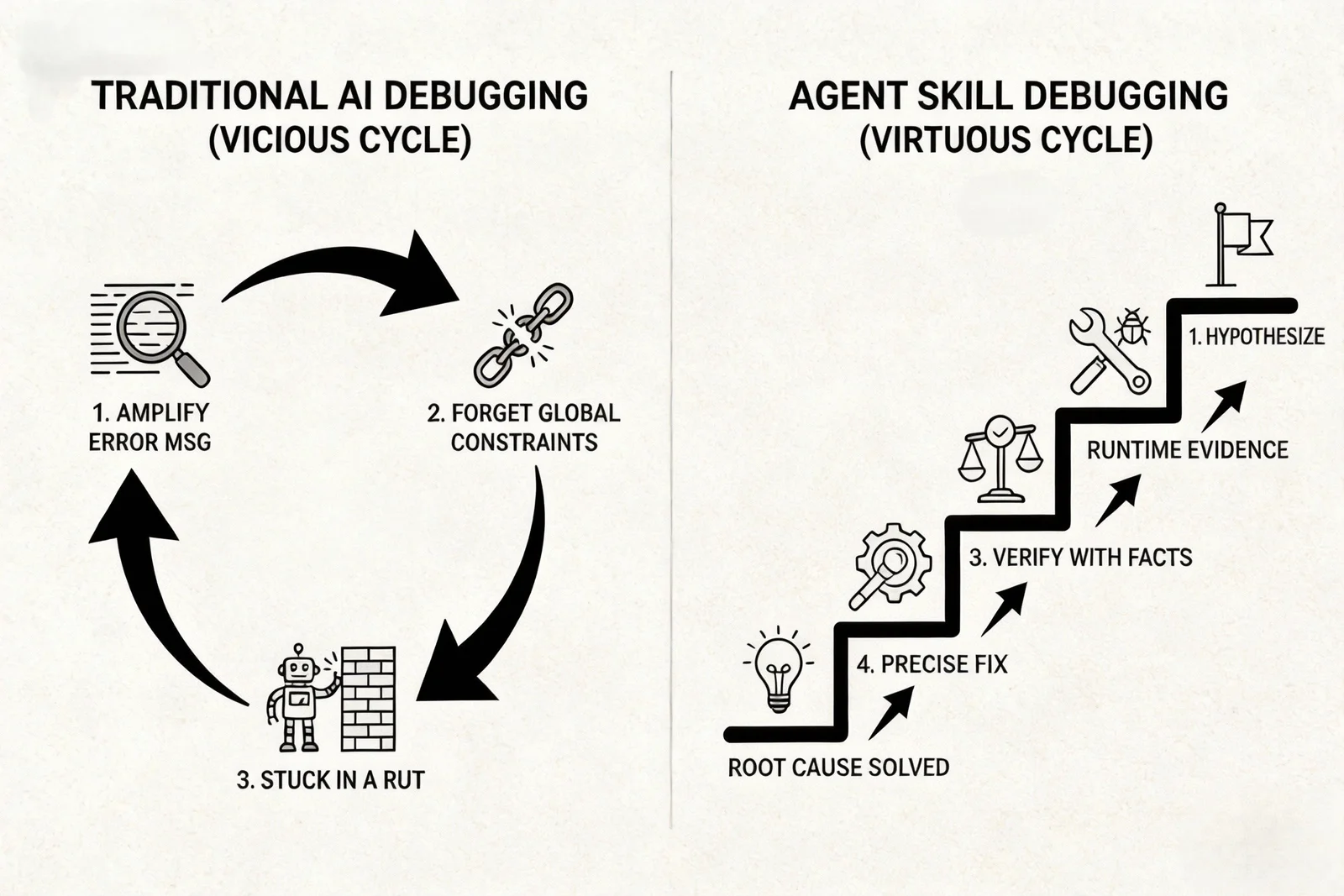

During each debugging iteration, three things typically happen:

- Error Messages are Amplified: The AI focuses on failure signals (symptoms) rather than the Root Cause.

- Global Constraints are Forgotten: Local fixes break system-level design.

- Exploration Turns into Tunnel Vision: The model repeatedly refines the same flawed idea, getting stuck in a "fix loop."

This leads to a familiar scenario: the AI appears confident, but it delivers hallucinated fixes rather than solutions.

The fundamental reason for AI debugging failures is the chasm between "static text" and "dynamic runtime." AI can only make probabilistic predictions based on static code. Without access to the true state of the program via tools like MCP (Model Context Protocol) or log injection, it naturally relies on blind guessing.

II. Underlying Principles: Giving the Agent a "God's-Eye View"

To solve this dilemma, effective Debug Agent Skills rely on two core principles:

- Injecting Runtime Context: Introducing a "God's-eye view" by capturing real-time execution paths, system states, and causal signals. Instead of debugging based on fragmented prompts, we provide the AI with Runtime Facts.

- Scientific Troubleshooting Loop: Adopting the methodology of "hypothesize, reproduce, validate, and fix." This enables the Agent to evolve from a "code completer" to a logic-driven Reasoning Engine.

III. Design Guide: 4 Key Elements of an Efficient Debug Skill

Agent Skills (popularized by Anthropic and the wider AI ecosystem) are reusable bundles of "professional methods + SOPs + domain knowledge."

The core challenge of AI debugging is not execution, but judgment and prioritization. To design a Debug Skill that effectively enhances an Agent's capabilities, we must focus on these four aspects:

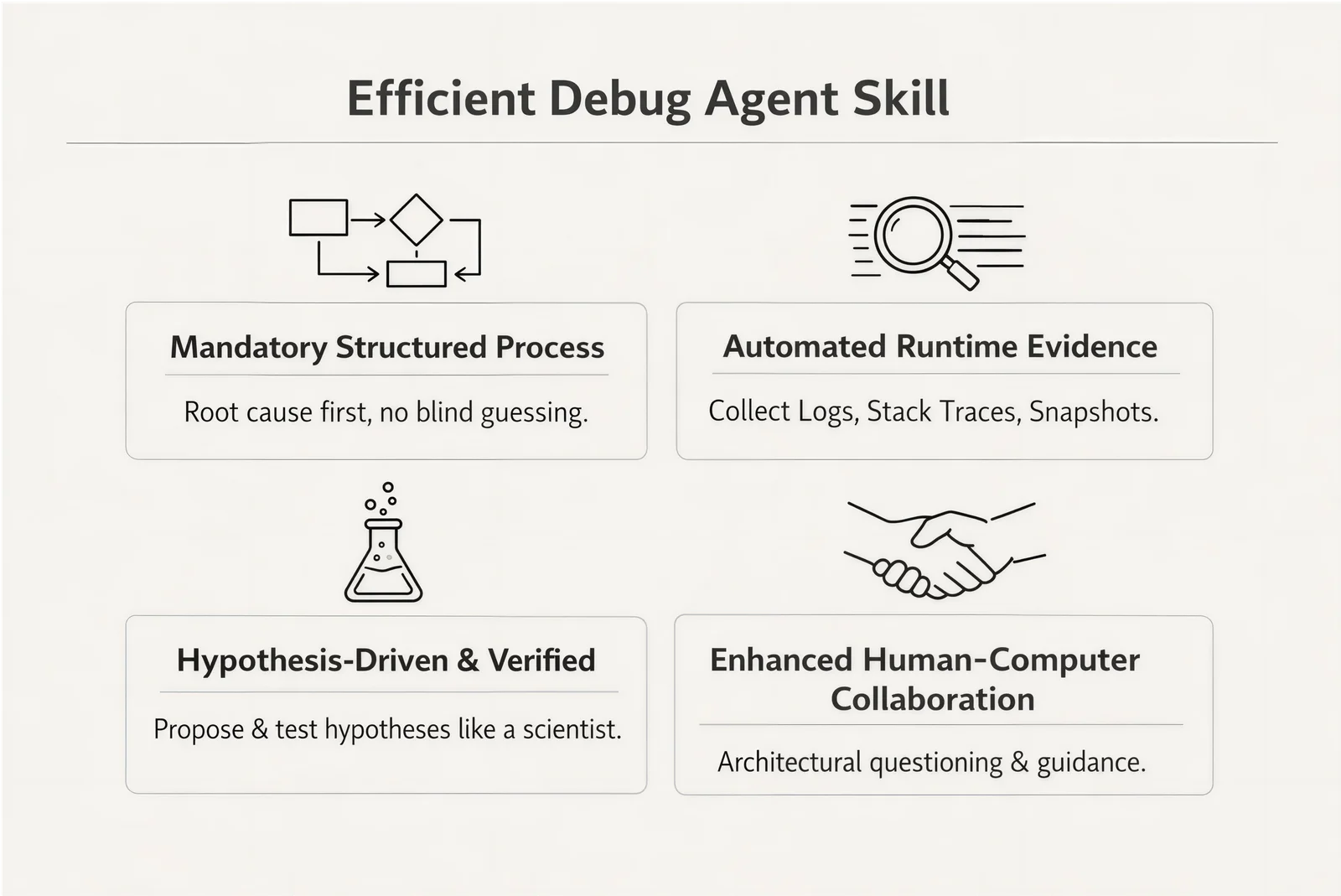

1. Mandatory and Structured Debugging Process

Agents are prone to "trial and error." A Debug Skill must enforce a scientific method:

- Root Cause First Principle: The Agent is not allowed to attempt a fix until the root cause is identified with evidence.

- Multi-stage Iteration: Clear stages for "Hypothesis," "Evidence Collection," "Analysis," and "Verification."

- "Red Flag" Mechanism: Detects anti-patterns (like blind guessing) and forces a strategy reset.
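The three rules above can be sketched as a small gatekeeper object. This is a minimal illustration, not the implementation of any skill discussed in this article: the names `DebugSession`, `RedFlag`, and `max_failed_fixes`, as well as the threshold of two failed fixes, are assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    HYPOTHESIS = 1
    EVIDENCE = 2
    ANALYSIS = 3
    VERIFICATION = 4


class RedFlag(Exception):
    """Raised when the session detects an anti-pattern such as blind guessing."""


@dataclass
class DebugSession:
    """Hypothetical gatekeeper: refuses fixes that lack an evidenced root cause."""
    stage: Stage = Stage.HYPOTHESIS
    root_cause: Optional[str] = None
    evidence: List[str] = field(default_factory=list)
    failed_fixes: int = 0
    max_failed_fixes: int = 2  # assumed red-flag threshold

    def record_evidence(self, fact: str) -> None:
        self.evidence.append(fact)
        self.stage = Stage.EVIDENCE

    def confirm_root_cause(self, cause: str) -> None:
        # Root Cause First: a cause without evidence is only a guess.
        if not self.evidence:
            raise RedFlag("root cause claimed without evidence")
        self.root_cause = cause
        self.stage = Stage.ANALYSIS

    def attempt_fix(self, fix_passed: bool) -> bool:
        if self.root_cause is None:
            raise RedFlag("fix attempted before root cause was identified")
        if fix_passed:
            self.stage = Stage.VERIFICATION
            return True
        self.failed_fixes += 1
        if self.failed_fixes >= self.max_failed_fixes:
            # Strategy reset: discard the current theory and start over.
            self.root_cause = None
            self.evidence.clear()
            self.failed_fixes = 0
            self.stage = Stage.HYPOTHESIS
        return False
```

In a real skill these checks would more likely live in the prompt or workflow instructions than in code; the sketch only makes the ordering constraints explicit.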

2. Automated Runtime Evidence Collection

A Debug Skill must transform dynamic behavior into data the Agent can understand.

- Log Servers and Instrumentation: Automatically inserting structured logs (e.g., NDJSON) at key code paths.

- Runtime Fact Capture: Integrating protocols (like MCP) to capture stack traces, variable snapshots, and function I/O when errors occur. This acts as a "time machine" for the Agent.

- Multi-language Support: Support for mainstream environments (TypeScript, Python, etc.).
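To make the evidence-collection idea concrete, here is a rough Python sketch of instrumentation that writes one NDJSON record per function call and, on failure, captures the stack trace and the local variables of the failing frame. It uses only the standard library and does not talk to MCP or a log server. The decorator name `trace_ndjson` and the record fields (`ts`, `fn`, `event`, and so on) are assumptions chosen for illustration.

```python
import functools
import io
import json
import sys
import time
import traceback


def trace_ndjson(stream=sys.stderr):
    """Hypothetical decorator factory: log each call as one NDJSON line."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            record = {
                "ts": time.time(),
                "fn": func.__qualname__,
                "args": repr(args),
                "kwargs": repr(kwargs),
            }
            try:
                result = func(*args, **kwargs)
            except Exception as exc:
                # Walk to the innermost frame, where the error was raised.
                tb = exc.__traceback__
                while tb.tb_next is not None:
                    tb = tb.tb_next
                record.update(
                    event="error",
                    error=repr(exc),
                    line=tb.tb_lineno,
                    # Variable snapshot of the failing frame.
                    locals={k: repr(v) for k, v in tb.tb_frame.f_locals.items()},
                    stack=traceback.format_exception(
                        type(exc), exc, exc.__traceback__
                    ),
                )
                stream.write(json.dumps(record) + "\n")
                raise
            record.update(event="return", result=repr(result))
            stream.write(json.dumps(record) + "\n")
            return result
        return wrapper
    return decorator


# Usage: collect records in memory instead of stderr.
buf = io.StringIO()


@trace_ndjson(buf)
def divide(a, b):
    return a / b


divide(6, 3)
try:
    divide(1, 0)
except ZeroDivisionError:
    pass

# One JSON object per line: a "return" record, then an "error" record.
events = [json.loads(line) for line in buf.getvalue().splitlines()]
```

Values are stored via `repr` so that every record stays JSON-serializable regardless of the argument types; a production tool would likely want size limits and redaction of sensitive values.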

3. Hypothesis-Driven and Evidence-Based Validation

- Mandatory Hypothesis Generation: Requiring 3-5 specific, testable hypotheses before writing code.

- Evidence Referencing: Forcing the Agent to cite specific runtime evidence (e.g., "variable x is null on line 42") to prevent hallucinations.

- Hypothesis State Management: Tracking which hypotheses have been rejected or confirmed.
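One possible way to track hypothesis state is a small board that demands 3-5 hypotheses up front and refuses any verdict that does not cite evidence. The sketch below is an assumption-laden illustration: `HypothesisBoard`, `Status`, and the example claims and evidence strings are all invented for this example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Sequence


class Status(Enum):
    OPEN = "open"
    CONFIRMED = "confirmed"
    REJECTED = "rejected"


@dataclass
class Hypothesis:
    claim: str
    status: Status = Status.OPEN
    evidence: List[str] = field(default_factory=list)


class HypothesisBoard:
    """Hypothetical tracker for hypotheses and the evidence behind each verdict."""

    def __init__(self, claims: Sequence[str]) -> None:
        if not 3 <= len(claims) <= 5:
            raise ValueError("provide 3-5 testable hypotheses before writing code")
        self.items: Dict[int, Hypothesis] = {
            i: Hypothesis(claim) for i, claim in enumerate(claims, start=1)
        }

    def resolve(self, hid: int, status: Status, evidence: str) -> None:
        if status is Status.OPEN:
            raise ValueError("resolve() needs a verdict: CONFIRMED or REJECTED")
        if not evidence.strip():
            # Evidence Referencing: no verdict without a cited runtime fact.
            raise ValueError("a verdict must cite runtime evidence")
        item = self.items[hid]
        item.status = status
        item.evidence.append(evidence)

    def open_ids(self) -> List[int]:
        return [i for i, h in self.items.items() if h.status is Status.OPEN]


# Illustrative session; the claims and evidence strings are made up.
board = HypothesisBoard([
    "x is null because the cache lookup missed",
    "x is null because the request body failed to parse",
    "x is overwritten by a concurrent handler",
])
board.resolve(2, Status.REJECTED, "log record: body parsed, 3 fields present")
board.resolve(1, Status.CONFIRMED, "variable x is null on line 42 after cache miss")
```

After these two calls only hypothesis 3 remains open, so the Agent knows exactly which theories are still worth testing.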

4. Enhanced Human-Computer Collaboration

- User Input Guidance: Guiding the user to provide high-quality reproduction steps.

- Architecture Questioning: Triggering a pause for architectural discussion if multiple fixes fail.

- Fix Verification: Automatically cleaning up debug instrumentation after the issue is resolved.
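Automatic cleanup is easiest when instrumentation is wrapped in recognizable markers at insertion time. The following sketch assumes a marker convention (`# region debug-agent` / `# endregion debug-agent`) invented for this example; the tools discussed in this article may use a different mechanism.

```python
BEGIN = "# region debug-agent"     # assumed marker, not a standard
END = "# endregion debug-agent"    # assumed marker, not a standard


def strip_instrumentation(source: str) -> str:
    """Remove every marked debug region, including the marker lines."""
    kept = []
    inside = False
    for line in source.splitlines(keepends=True):
        stripped = line.strip()
        if stripped == BEGIN:
            if inside:
                raise ValueError("nested debug region")
            inside = True
            continue
        if stripped == END:
            if not inside:
                raise ValueError("end marker without a begin marker")
            inside = False
            continue
        if not inside:
            kept.append(line)
    if inside:
        raise ValueError("unterminated debug region")
    return "".join(kept)
```

Failing loudly on unbalanced markers is a deliberate choice here: silently deleting to the end of the file would be worse than leaving the instrumentation in place.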

IV. Tool Inventory: Top Debug Agent Skills Comparison (2026)

There are several approaches to solving the context gap. Here is a comparison of current market solutions:

| Project Name | Core Logic | Core Strengths | Weaknesses |
|---|---|---|---|
| Systematic-debugging | Modifies source code to insert logs and analyze output (TDD approach). | Strong anti-hallucination; layered diagnostic model. | High interaction friction; lacks automated tool integration. |
| Debug | Sets up a local log server to transform console output into structured JSON. | Independent logging infrastructure; closed-loop verification. | High barrier to entry; highly intrusive to the code. |
| Debugging-strategies | Provides checklists and methodologies (non-intrusive). | High universality; zero intrusiveness. | Manual execution required; high barrier for novices. |
| Debug-mode | Enforces a "root cause first" workflow via prompt engineering. | Strict closed-loop workflow; emphasizes minimal changes. | Severe context window consumption; relies heavily on reproducibility. |
| Syncause Agent Skill | Runtime Context Injection. Solves problems by collecting the true trajectory of the program execution. | Zero-configuration automation; eliminates hallucinations by using Real Data; zero production impact. | Current language support is focused on specific stacks. |

V. Conclusion

The gap between "static text" and "dynamic runtime" is the primary reason why AI struggles with complex debugging. Debug Agent Skills provide the missing link.

Skills offer a low barrier to entry, ease of use, token savings, composability, and cross-platform compatibility. Applied to debugging, they upgrade the AI from "spell checking" to "logical reasoning": runtime insights stand in for the ever-growing context that would otherwise exceed the model's limits, which greatly improves debugging efficiency.

Up Next: We will conduct a deep-dive evaluation of these Debug Skills in our next post.

If you are tired of the AI's "trial and error" approach, consider integrating the Syncause Agent Skill. It takes just 2 minutes to set up, allowing Runtime Facts to become the cornerstone of your AI's reasoning—one less guess, one less round of rework.